Generative AI is having its moment in the sun, and it’s casting a menacing shadow over the creative industries while it does so.

Generative AI may one day be able to take on a fully fledged designer, or it could fall flat on its originality-deficient face. But spotlighting the ways AI fails to fully replace creatives obscures how it could help them do better work, faster. So we’re shifting our gaze through focused, practical testing in our three-part generative AI blog series.

—

You’ve made it! Welcome to the final entry in our three-part generative AI series.

So far we’ve set Midjourney, Leonardo and Photoshop Beta with Firefly integration two creative challenges: to create a mockup of a cover design for a whitepaper and to create variations of existing stock imagery.

The results in our last test were impressive. Midjourney created a set of images that clearly drew from our base picture while producing interesting, high-quality variations, not that far off what you’d get from a stock library.

Photoshop Beta with Firefly integration took a different approach, owing to its tighter creative ruleset, transposing the model into four different environments with impressive realism.

Leonardo again struggled the most of the three systems. Most notably, the model from our original image was replaced with a much younger woman, raising questions about diversity and representation in AI training datasets. (Also, she had three hands.)

Now it’s time for our final challenge: testing generative AI’s ability to support creative ideation for landing page design.

Test 3: Using descriptive prompts to deliver creative artwork ideas for a landing page

A blank page is catnip to some creatives and kryptonite to others. Generative AI is a good way to start experimenting at speed, getting some early concepts in place to spark ideas.

One of AI’s biggest strengths is its ability to generate a near-endless stream of ideas from a single prompt. As a jumping-off point for creative work, this could make it a very useful tool in the early stages of ideation. Here, we asked our three tools to generate some landing page ideas, using the same prompt across all of them.

Note that we also included the name of a designer and illustrator, Leo Natsume, to see how these systems would react to being asked to draw from a specific style, or indeed whether they would push back.

Universal Prompt: UI Design with 3D abstract shapes, Landing page, Trending UI Color palette, by Leo Natsume, Vibrant, High Resolution.

Midjourney

Verdict:

The prompt is everything when creating UI layouts — the more specific the better. This clearly paid off with Midjourney; the color palette alone could inspire an entire campaign. Lots of fertile ideas for further exploration here.

Leonardo

Verdict:

Leonardo’s results are interesting, but feel a little generic (and less polished) compared with Midjourney’s, at least for our tastes. Still, there are some interesting things going on that could trigger new ideas or design approaches. The use of 3D shapes is definitely a standout feature.

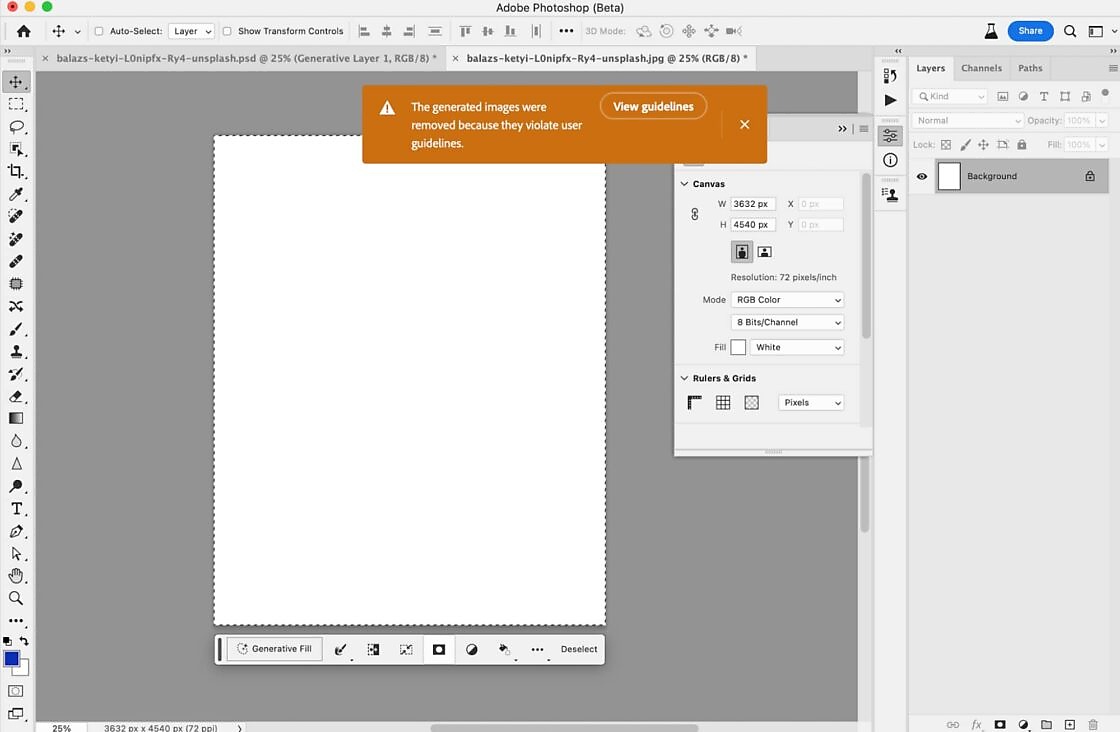

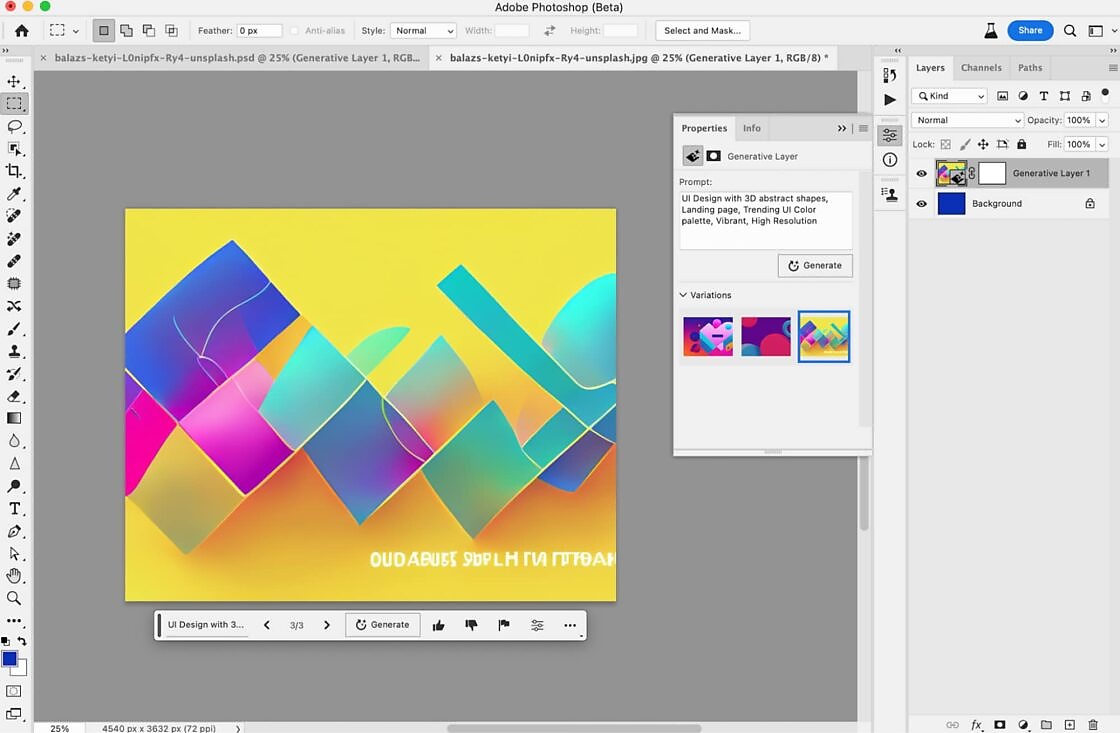

Photoshop (Beta) – using Firefly AI

Verdict:

Photoshop Beta refused to display the generated images for this prompt because mentioning a well-known designer and illustrator’s name violated its user guidelines.

This is perhaps the biggest grey area in generative AI’s capacity for “creative” work. Other platforms allow unlimited permutations of images that take “inspiration” from existing designers and artists without necessarily compensating them.

Midjourney and Leonardo didn’t reject the inclusion of Leo Natsume in the prompt, but to their credit, their outputs remained significantly different from his work, as you can see here.

Naming visual references is a great way to fine-tune AI prompts and, used responsibly, feels akin to gathering references manually. But there’s a clear potential to misappropriate intellectual property, and a responsibility not to.

—————-

The only way we could make this work with Photoshop Beta’s Firefly integration was by removing the designer’s name from the prompt. And the results are…well. Ah. Nowhere near as good. But maybe there’s an idea buried somewhere here? If you like slightly jellified squares?

On the other hand, using Firefly natively (through the Firefly platform itself, rather than Adobe’s beta integration in Photoshop) gave us this even more unusual image. It doesn’t exactly scream landing page, but there’s some interesting abstract colour and shape work that, again, could be the ember that starts a new creative idea.

Test 3 summary:

The third test confirmed that generative AI can give designers a jumping-off point for new creative pathways.

Once again, Midjourney led the pack, highlighting the importance of specific and detailed prompts in obtaining favorable results. The use of flowing shapes and the textures of those shapes gave a nice tangibility to the design.

Photoshop (Beta) with Firefly AI integration faced limitations due to user guidelines. Once the designer’s name was removed it provided some rough, intriguing results that could spark new ideas. But if we’re being fair across the tests and focusing on the Photoshop integration, it simply couldn’t deliver.

While Leonardo produced some interesting ideas, it fell slightly short of Midjourney’s performance. However, its bold colours and use of three-dimensional perspective suggest that this kind of early design ideation is its best use case.

Ultimately, the choice of generative image platform depends on the specific requirements of each project and the desired outcomes; how each platform’s strengths and weaknesses show up depends heavily on what you ask it to do.

Taking stock

So there you have it: Midjourney, Leonardo and Photoshop Beta’s Firefly integration put through their paces on specific, tangible creative tasks to test their impact on designers’ workflows.

Across the three tests, Midjourney was the clear standout; in all three, it delivered usable early ideation that would save a busy designer time.

Photoshop Beta’s Firefly integration is an interesting case. With limitations on the data it is trained on and the prompts it will (or will not) follow, it may seem hamstrung. But these restrictions minimise the chance of accidentally wandering into ethically muddy waters, and where it did deliver, the results were impressive.

Finally, Leonardo takes home the wooden spoon for this set of specific tasks. It struggled to generate a whitepaper cover and produced some truly Lovecraftian stock imagery, though it does seem to have a niche in early design ideation, as its strong showing in the final task suggests.

Taking all three tests into account, there are a few things to keep in mind:

1. Limitations in text rendering: As observed in the first test, most generative image platforms may struggle with rendering text effectively. This limitation should be considered when generating images that require accurate and visually appealing text elements. It’s important to review and refine the text output to ensure its quality meets your expectations.

2. Variation in output quality: While generative image AI platforms can produce impressive results, quality wasn’t consistent across Midjourney, Leonardo and Photoshop Beta’s Firefly integration. Different platforms may excel at different tasks, so it’s crucial to assess and compare the results carefully to ensure they align with your project requirements.

3. Potential off-target results: The second test revealed that generative image AI platforms, such as Photoshop Beta’s Firefly integration and Midjourney, may produce outputs that deviate from the original image or prompt. While this can lead to alternative and interesting ideas, it’s important to be prepared for off-piste results that may not align with your initial vision. Regular evaluation and adjustment may be necessary to refine the generated images as per your specific requirements.

4. User guidelines and limitations: The third test highlighted that certain generative image AI platforms, like Photoshop Beta with Firefly AI integration, may have strict user guidelines regarding the generated content. In some cases, violation of these guidelines could restrict the visibility or usage of the outputs. It’s essential to familiarize yourself with the platform’s guidelines and restrictions to ensure compliance and avoid potential issues.

5. Potential bias and lack of originality: As with any AI system, there is a risk of unintentional bias in the generative image outputs, especially if the training data contains biased or limited representations. Additionally, while generative AI can provide alternative ideas, it may not always generate entirely original concepts. To maintain originality and mitigate bias, it’s crucial to incorporate human creativity, critical evaluation, and ethical checks in the design process.

Best practice in an ever-changing domain

There’s a lot of information to consider in this blog. To that end, we thought long and hard about a set of clear-cut rules to bear in mind when deploying generative AI in your marketing artwork (in addition to the ethical checklist). We settled on the following:

- Understand the limits and ethical implications – Midjourney, Leonardo and Photoshop Beta with Firefly integration won’t save you the salary of a creative veteran twenty years deep, or even of the person who started a year ago; they can’t produce original thought and they will make mistakes. Apply them on a small scale, practically, and always keep a creative embedded in the project.

- Use the tool to spark new ideas and creativity – generative AI is fantastic at producing a ton of ideas quickly, which is precisely why it works well when designers hit a block. At this stage, it gives creatives a jumping-off point to explore new angles and ideas.

- Strike a balance between authenticity/creativity & enhancement – because anyone with eyes and ears will know when something hasn’t originated from a creative mind.

- Be specific, be smart – sometimes there just aren’t enough hours in the day. An early-stage content discussion could do with some pizzazz, but you can’t spare a designer who’s knee-deep in other projects. Using generative AI for rough early ideation and grunt work is the way to go.

Looking ahead

It’s not just marketing that is staring down a reckoning with artificial intelligence and generative large language models. From film and music to law and technology, there is a race between increasingly sophisticated machinery and those who seek to understand, use or limit it.

The biggest issue for now is the speed of this area’s development; in the space of a few years we’ve seen unimaginable leaps forward, and that’s not going to stop. Anyone working in a creative discipline will need to evolve their relationship with these tools and stay aware of how they develop.

As for the rest of the generative AI discussion, well, that’s bigger than a blog. Or three.
